Turn Support Hunches into Usable Data for Product Teams

Frontline support teams talk to customers all day, every day, so they know which areas of a product trip people up. That information is an incredible resource for the people designing and building the product, but making the most of it means backing up anecdotes and hunches with data.

Collecting the right data

If you're a nerd like me, pulling in data is great fun, but you need to be sure that the information you’re gathering will achieve what you need it to. Think about why you are gathering the data: How do you want to use it? To inform? To persuade? To measure progress?

Remember the customer

I remember being super proud of some very snazzy charts that I made for an all-hands meeting, showing help desk volume and reply times. I presented my colorful, easy-to-understand data, everyone nodded politely, and we moved on. The conversation turned to a new feature — we were discussing whether to add a new question type to our software. I immediately chipped in: "Yes! So many customers have asked me for that!"

Our CEO turned to me and asked, "How many customers?" After some scrabbling, I found a spreadsheet counting feature requests and gave him the answer. “OK, but how old is that information? Do people still want to use the product that way? How many requests have we had in the last couple of months?”

I was completely stumped.

I’d focused on using data as a means to report on team performance and hadn’t considered that it was just as important to use data to advocate for customer needs.
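In hindsight, the question I couldn't answer comes down to filtering requests by date. Here is a minimal sketch of that idea in Python. The request log, the feature names, the dates, and the 60-day window are all invented for illustration; they are not figures from the spreadsheet I mention above.

```python
from datetime import date, timedelta

# Hypothetical log of feature requests: (date received, feature requested).
requests = [
    (date(2023, 1, 10), "new question type"),
    (date(2023, 5, 2), "new question type"),
    (date(2023, 5, 20), "new question type"),
    (date(2023, 5, 25), "export to CSV"),
]

def count_recent(requests, feature, today, days=60):
    """Count requests for `feature` received in the last `days` days."""
    cutoff = today - timedelta(days=days)
    return sum(
        1 for received, name in requests
        if name == feature and received >= cutoff
    )

# All-time total versus the recent window (both computed from the invented log).
total = sum(1 for _, name in requests if name == "new question type")
recent = count_recent(requests, "new question type", today=date(2023, 6, 1))
print(f"{total} requests in total, {recent} in the last 60 days")
```

Keeping the date alongside each request is the important part. Without it, there is no way to tell a current need from one that faded a year ago.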

Categorize feedback

My first professional support role was in a small startup. We were a team of 10 people creating, selling, and supporting ideation software for organizations big and small. Product decisions were discussed and agreed on by me, the lead engineer, the CEO, and the head of sales. To make a case for changing a feature, I had to accurately describe the problem and demonstrate the impact it had on users.

To do that, I needed to be able to summarize and present insights from user conversations. I wanted a way to track why users got in touch with us and which areas of the product they were talking about. I didn’t get that right overnight; it took me a few tries to find a format that gave me the detail I needed.

To begin with, the support team tagged incoming conversations with the related feature: account settings, content management, etc. This provided me with a snapshot of the different areas of the product that confused or frustrated customers. This was a great first pass at the data, but I wanted to know more than which areas customers needed help with; I wanted to know why they were reaching out.

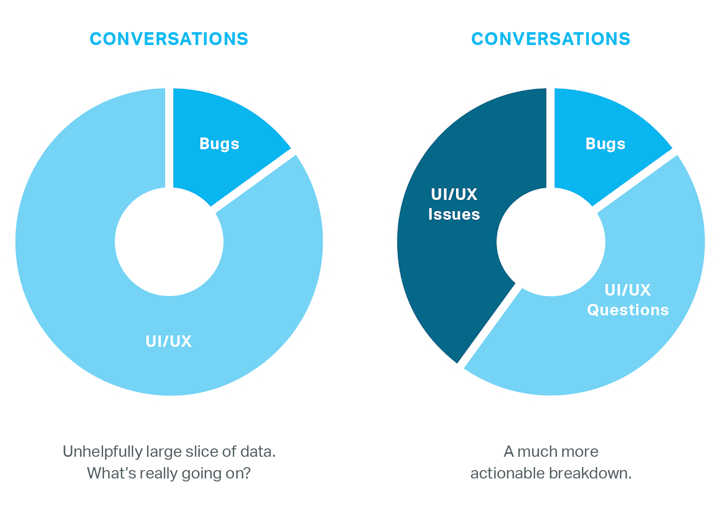

With that in mind, we started to not only tag conversations, but also to categorize the reasons why users were contacting us. Initially, there were two conversation categories: bugs and UI/UX questions. With these categories, I could start to ask more detailed questions of the data: Which features are surfacing the most bugs? Which features are users most likely to need some help setting up?

I was pretty happy with the story that I could tell with this combination of feature tags and categories. There were times, though, when users were frustrated by the software acting in really unexpected ways. These issues weren’t strictly bugs, but they were more than just a question about how to do something.

I noticed that there were actually two distinct types of "question" tickets: “How do I...?” questions and UI/UX issues, where the interface or workflow of the product worked against the user to confuse them or cause them issues. For example, a question might be “How do I add a field on this page?” while an issue would be “I tried to add a field and it deleted the one I’d already set up!”

UI/UX questions — a question that’s best addressed through customer education in support or documentation, and that doesn’t require any product changes.

UI/UX issues — customer confusion that could be addressed through improvements in the product design and function.

Bugs — issues where the product isn’t working as intended.

Understanding the difference between these categories allowed me to bring a more informed voice to product conversations. I could push more effectively for change when I was able to clearly show which areas customers understood and were able to use, but still found confusing or complicated, as opposed to areas where we just needed to educate our customers once and then it all made sense for them.
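If your help desk doesn't support this kind of labeling out of the box, the scheme is simple enough to model yourself. The sketch below is one possible shape, not the tool we used. The `Category` and `Conversation` names are hypothetical, and I've assumed a "Content management" feature tag for the two example messages.

```python
from dataclasses import dataclass
from enum import Enum

class Category(Enum):
    QUESTION = "UI/UX question"  # best addressed through education or documentation
    ISSUE = "UI/UX issue"        # best addressed through design or workflow changes
    BUG = "Bug"                  # the product isn't working as intended

@dataclass
class Conversation:
    feature: str         # feature tag (assumed here for illustration)
    category: Category   # why the user got in touch
    summary: str

examples = [
    Conversation("Content management", Category.QUESTION,
                 "How do I add a field on this page?"),
    Conversation("Content management", Category.ISSUE,
                 "I tried to add a field and it deleted the one I'd already set up!"),
]

for c in examples:
    print(f"[{c.feature}] {c.category.value}: {c.summary}")
```

Each conversation carries exactly one feature tag and one category, which is what makes it possible to count them against each other later.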

Interpret the data

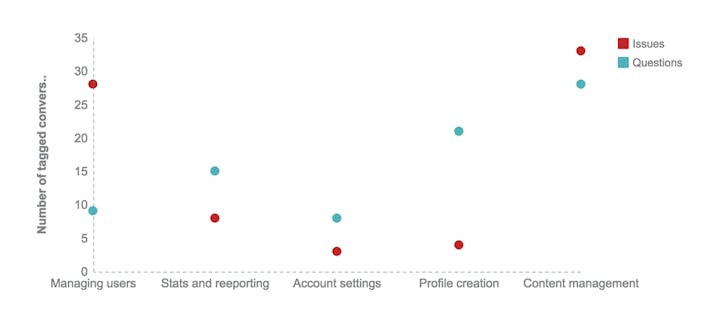

The conversation categories and tags worked together to paint a fuller picture of how users were engaging with the product. Take a look at the chart below:

If you look at the types of conversations that were tagged with the Managing users feature, there are quite a lot of UI/UX issues, but not many questions — this tells me that on the whole the feature is easy enough to understand, but that the way it works just doesn't make sense to many users.

On the flip side, we didn’t receive many issues related to Profile creation, but we did get a lot of questions. This suggests that we need to better guide users through the process, or provide more tooltips.

The chart also shows that there were both a lot of UI/UX issues and questions tagged with the Content management feature. This means that it is a real problem area for users, so it would be a good idea to review how the feature works and how we’re helping users to understand it.

Combining the tags and ticket categories gave me a powerful data set that I used to bring the voice of the customer to product discussions. I could prove which bugs needed prioritizing, where we needed to change on-screen copy, and which features needed to be updated.
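The reading I describe above is a cross-tabulation of feature tags against categories, followed by a rule of thumb. Here is a rough sketch of how that could look in Python. The counts are invented to mirror the pattern in the chart (they are not the real numbers), and the threshold of 5 and the `suggest` helper are assumptions of mine.

```python
from collections import Counter

# Invented (feature tag, category) pairs, one per conversation.
conversations = (
    [("Managing users", "issue")] * 8 + [("Managing users", "question")] * 2
    + [("Profile creation", "issue")] * 1 + [("Profile creation", "question")] * 9
    + [("Content management", "issue")] * 7 + [("Content management", "question")] * 8
)

# Count how many conversations fall into each (feature, category) cell.
counts = Counter(conversations)

def suggest(feature, threshold=5):
    """Turn the issue/question counts for a feature into a rough next step."""
    issues = counts[(feature, "issue")]
    questions = counts[(feature, "question")]
    if issues >= threshold and questions >= threshold:
        return "review the feature and its guidance"
    if issues >= threshold:
        return "review the design or workflow"
    if questions >= threshold:
        return "improve guidance and tooltips"
    return "no action flagged"

for feature in sorted({f for f, _ in conversations}):
    print(f"{feature}: {counts[(feature, 'issue')]} issues, "
          f"{counts[(feature, 'question')]} questions -> {suggest(feature)}")
```

A fixed threshold is crude, and in practice you would want to judge the counts against overall volume. The point is only that two labels per conversation are enough to separate "confusing design" from "needs better guidance."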

Beyond tagging

Conversations give you a good indication of why people are getting in touch, but what about those users who don’t reach out to you? How do you know what people are having trouble with if they don’t contact your support team?

Use the knowledge base to identify problems

Two metrics that I’ve found useful for improving product UI are "popular search terms" and “most viewed pages.” In one case, we found that the help guide for a rarely used feature was receiving a disproportionate number of views. As a result, we changed some of the on-screen instructions for that feature and put in a few more tooltips. The next month, the help guide went from being the 5th most viewed page in the help docs to being the 15th, letting us know that we’d done the right thing!
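Tracking where a page sits in the "most viewed" list from month to month is easy to automate if your knowledge base lets you export view counts. The sketch below assumes such an export exists as a simple page-to-views mapping. The page names and numbers are made up and far smaller than a real help center.

```python
def rank_pages(views):
    """Return {page: rank}, where rank 1 is the most viewed page."""
    ordered = sorted(views, key=views.get, reverse=True)
    return {page: position for position, page in enumerate(ordered, start=1)}

# Hypothetical monthly view counts, before and after the on-screen changes.
before = {"Getting started": 900, "Billing": 700, "Rarely used feature": 400, "Exports": 150}
after = {"Getting started": 880, "Billing": 720, "Rarely used feature": 90, "Exports": 160}

page = "Rarely used feature"
rank_before = rank_pages(before)[page]
rank_after = rank_pages(after)[page]
print(f"{page}: rank {rank_before} -> rank {rank_after}")
```

A drop in rank after a product change is a hint rather than proof, so it is worth checking that conversations about the feature didn't rise over the same period.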

Embed support staff with product teams

Having a member of your support team embedded with the product team can provide valuable insight and help to keep new features on track.

In the early stages of designing a new piece of a product, the support team has the most reliable picture of how users use the product. This is especially useful in smaller product teams that don’t have the resources for in-depth or complex user research. Support team members can tell you the most common tasks that users perform on particular pages, the language that customers use, and other apps and software that they enjoy using.

As the design progresses, having a regular check-in with a member of your support team keeps the end user front of mind.

For example, a colleague shared an iteration of a new function that gave administrators a new way of grouping their users. He called the new function Automatic Groups, because users would automatically be added to groups based on their answer to a profile question, and group membership would be updated if they changed their answer. It was a great addition to the product, but I was worried that the name could cause some confusion. I knew that our users often talked about "automatic groups" when they described an existing way of managing groups; it wasn't the official name for it, but it was what people used when talking about the feature. With this in mind, we came up with the new name: Dynamic Groups. Both the feature and the name were well received, and I was confident that we had saved ourselves and our users a lot of time and energy!

Support as a QA resource

Quality Assurance testing is another area where input from a customer-facing team is important. After the initial bugs are worked out, it’s good to have someone cast a "customer eye" over finished code before it ships.

In the past, I’ve managed to “break” new features in testing because I used them in ways for which they weren’t designed. I knew that users would often try workarounds to get what they needed from the product, so that’s exactly how I tested new features. This approach meant that I could find holes that the product team hadn’t been looking for as they tested. Involving your support team in product testing can make your product more robust.

Support can actively improve products

Support will always have a role to play in shaping the future of a product, because the team closest to customers is an invaluable resource. Use data to make your voice heard, get involved early in the design process, and get your hands dirty during testing. Your product team and your users will thank you for it.